BaseFS

Basically Available, Soft-State, Eventually Consistent File Sytstem for P2P Cloud Management

Created by Marc Aymerich /

glic3rinu.github.io/basefs/presentation

Cloud Management

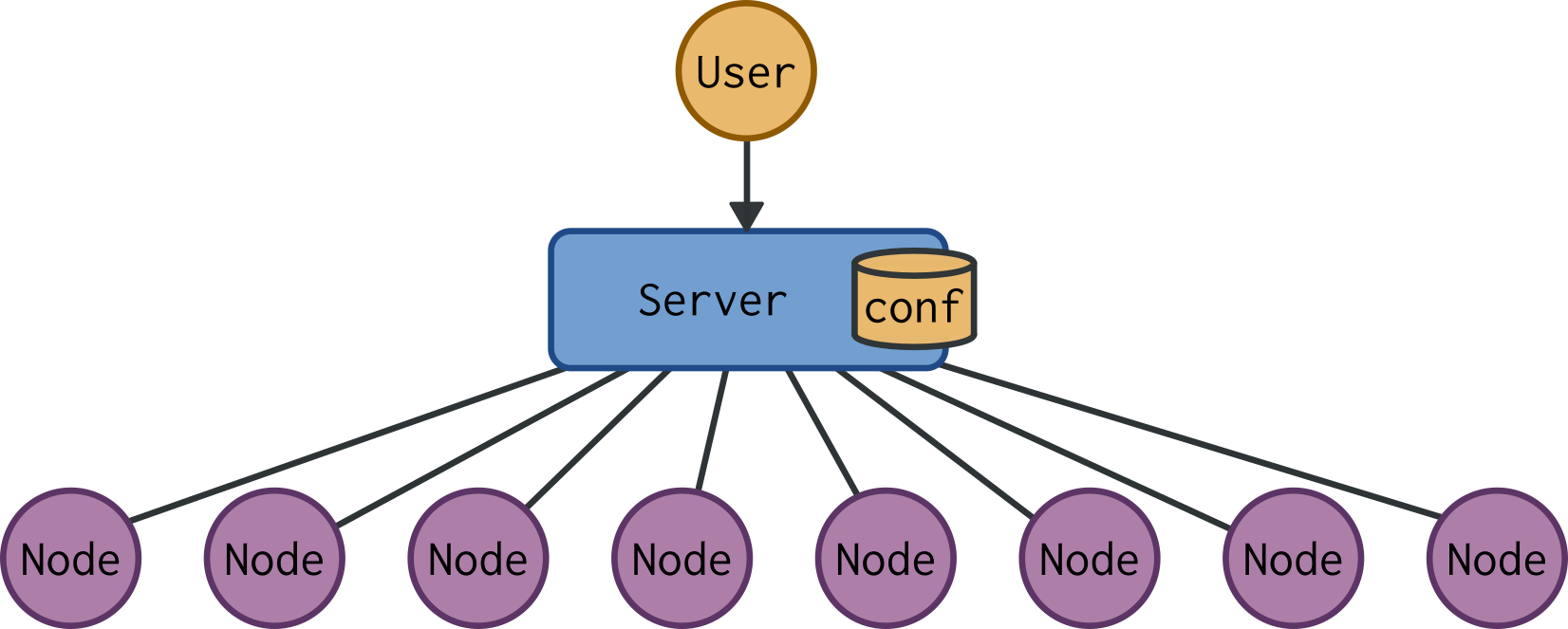

Client-Server Architecture

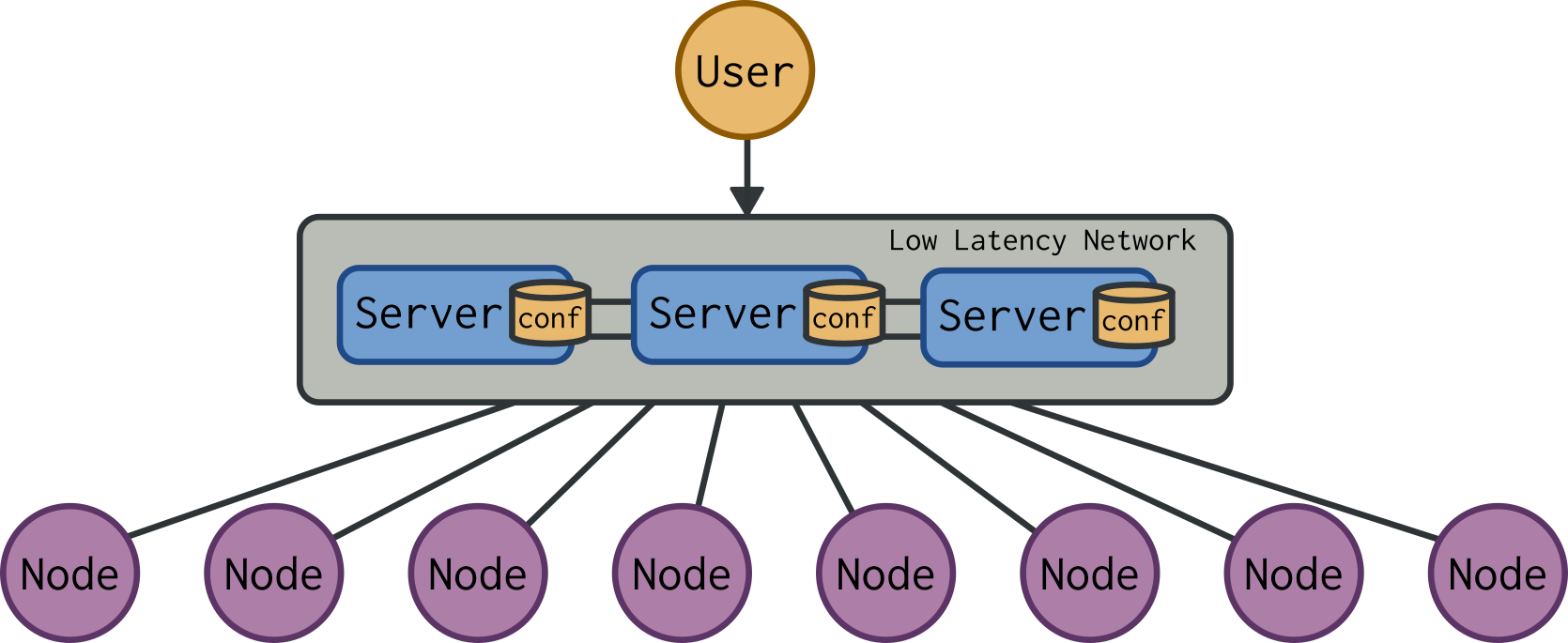

HA Client-Server Architecture

Traditional Cloud is

Centralized

Community Cloud is

Peer-to-peer

How can we do better

P2P management?

Consistency or Availability ...

... You must choose wisely

Eventual Consistency

- Available under partition:

No need for stable quorums - High performance:

Progress without coordination - Geographic and administrative scalability

No latency bottleneck, no trust between nodes - Weakly consistent communication channel:

Gossip-style network protocol, NO APIs - Nodes don't need to trust each other: Byzantine

failures on SC systems are practically untractable

BaseFS

BaseFS Under The Hood

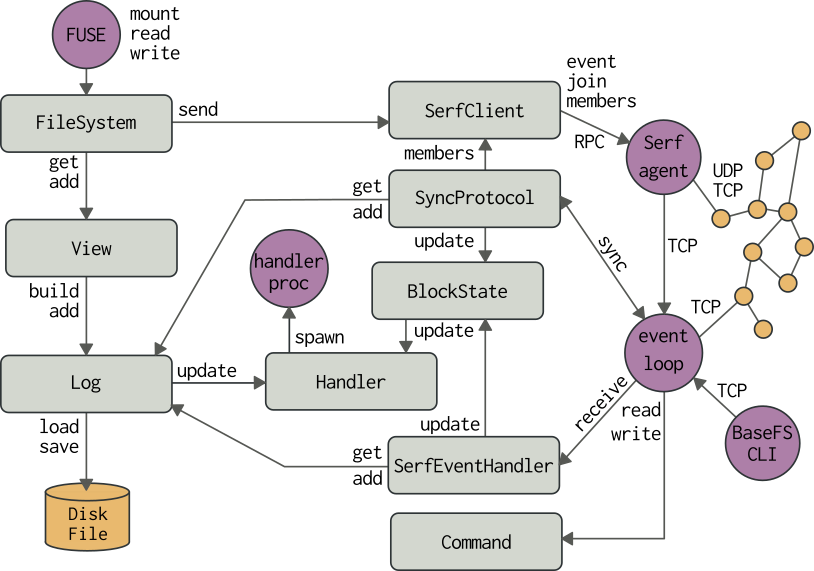

- Log - Specialized Merkle tree of inmutable entries

- View - Automatic conflict resolved composition of the log

- Gossip protocol - Membership and initial dissemination

- Synchronization protocol - Full state sync

- File system - Provides file system API for the view

Module Overview

Log

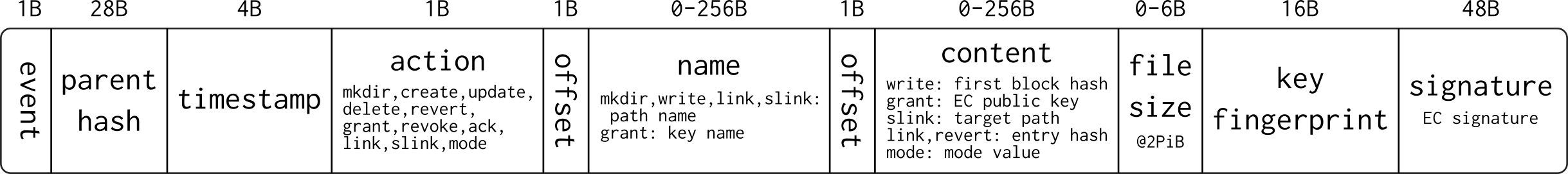

- Log entries - filesystem metadata "i-nodes"

- Log blocks - file content chunks

Log Entries

revoke, ack, link, slink, mode

Log Entries Properties

- Specialized Merkle Tree - Link Hashes

- Content addressing: uniquely identified by its hash

- Tamper resistance: hash verification

- Deduplication: objects with same hash are equal

- Casual ordering: object linked is older

- Convergent Replicated Data Type

- Associativity f(f(a, b), c) = f(a, f(b, c))

- Commutativity f(a, b) = f(b, a)

- Idempotency f(f(a)) = f(a)

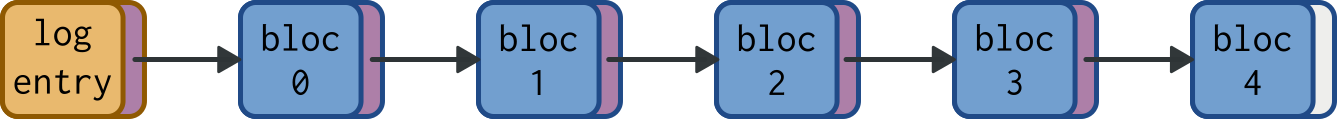

Log Blocks

- Hashed linked list

- Bsdiff4 patches in 483B chunks

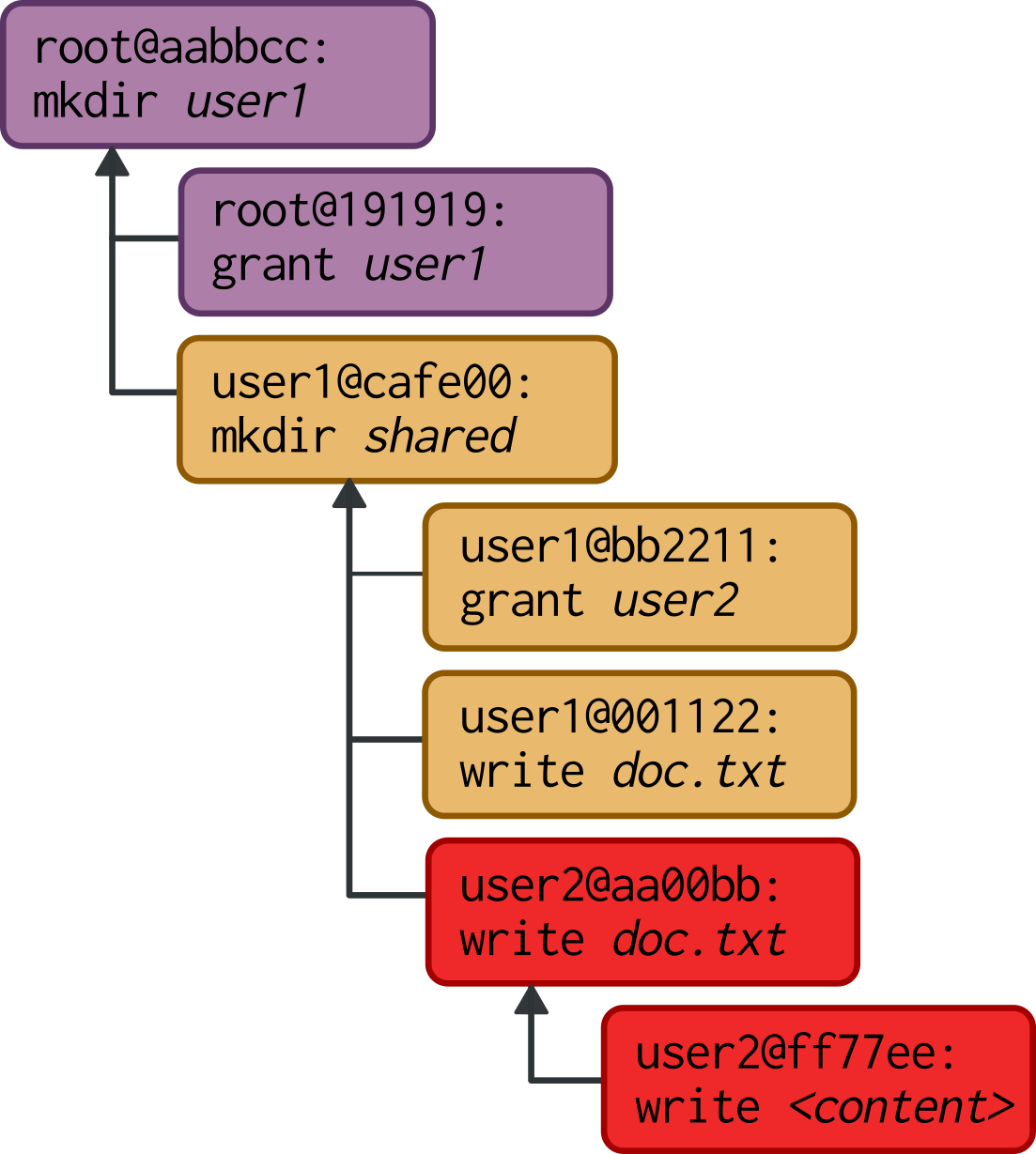

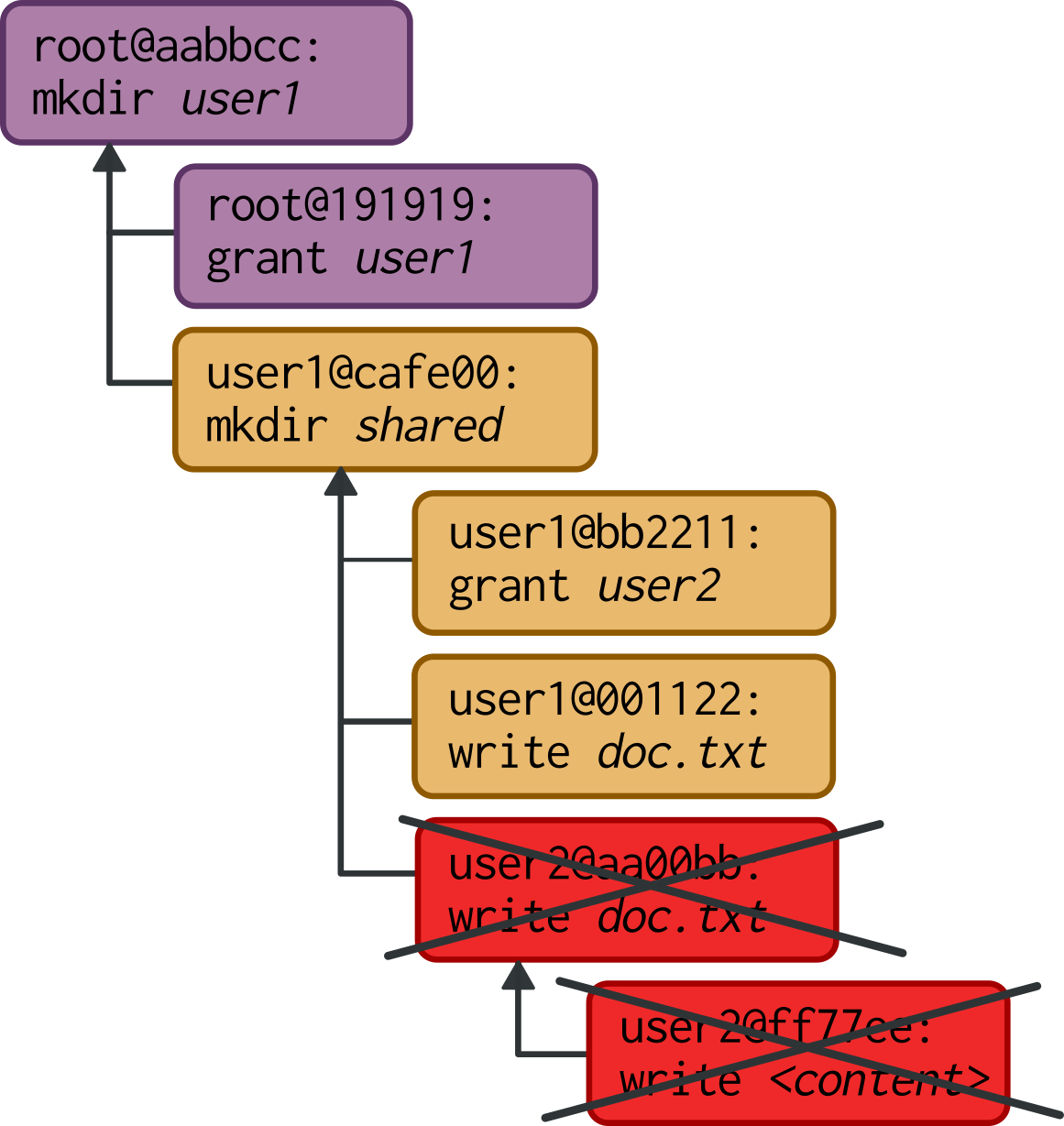

View

- Conflict resolved composition of the log

- Self-certified filesystem with write permissions

- Proof-of-authority:

- Higher hierarchy key branch

- If equal, more contributors branch

- If equal, higher root hash branch

View Example

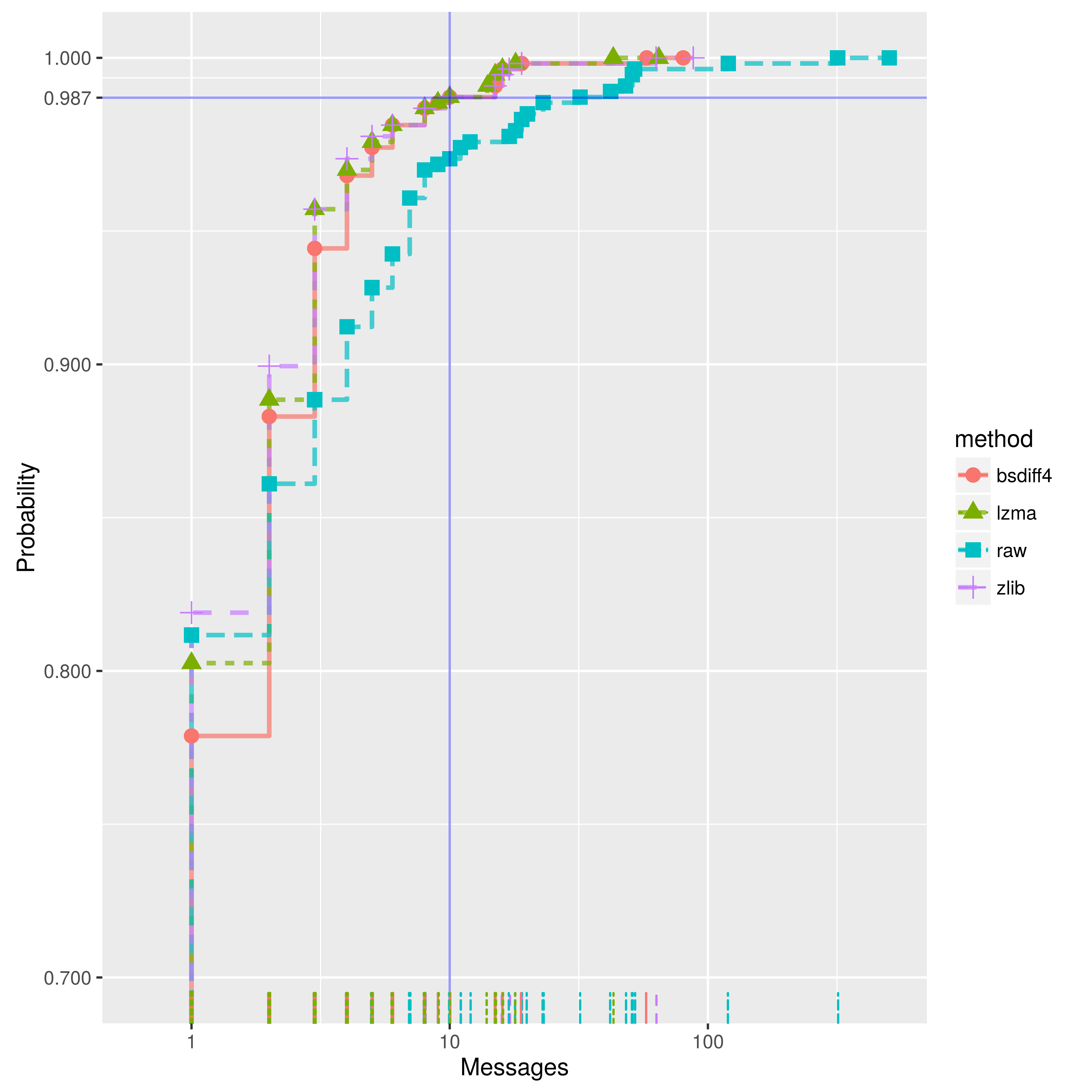

Gossip Protocol

- Implemented using Serf library

- Provides group membership and log disemination

- Uses Serf custom events payload (512 bytes)

/etc number of messages

Synchronization Protocol

- Log disemination

- after partition

- large files

- bootsrap joining nodes

- Unseen-biased randomized node selection

$$p_i = t_i/\sum_{j=1}^{1,n} t_j$$ - Efficient divergence detection with Merkle trees

File System API

Example:

Replace CONFINE controller and node software with BaseFS

Installation

$ pip3 install basefs

Bootstrap

# Create new key

$ basefs genkey

# Createw new filesystem

$ basefs bootstrap confine -i <ip>

# Mount

$ mkdir ~/confine

$ basefs mount confine ~/confine

Build Data model and handlers

Model CONFINE system with directories and files, considering that permissions are hierarchical.

/users/user1/info

/users/user1/auth_keys/key1

/groups/a/members/user1

/groups/a/nodes/node1

/groups/a/slices/slice1/node1Write handlers that execute on changes,

~/confine/handlers/confine-node.sh creates CONFINE slivers

Distribute

# Get the log from another machine

$ basefs get confine <ip>[:<port>]

$ mkdir ~/confine

$ basefs mount confine ~/confine

# CONFINE nodes should define the handler for creating VMs

$ basefs mount confine ~/confine \

--handler ~/confine/handlers/confine-node.shEvaluation

Parametrization

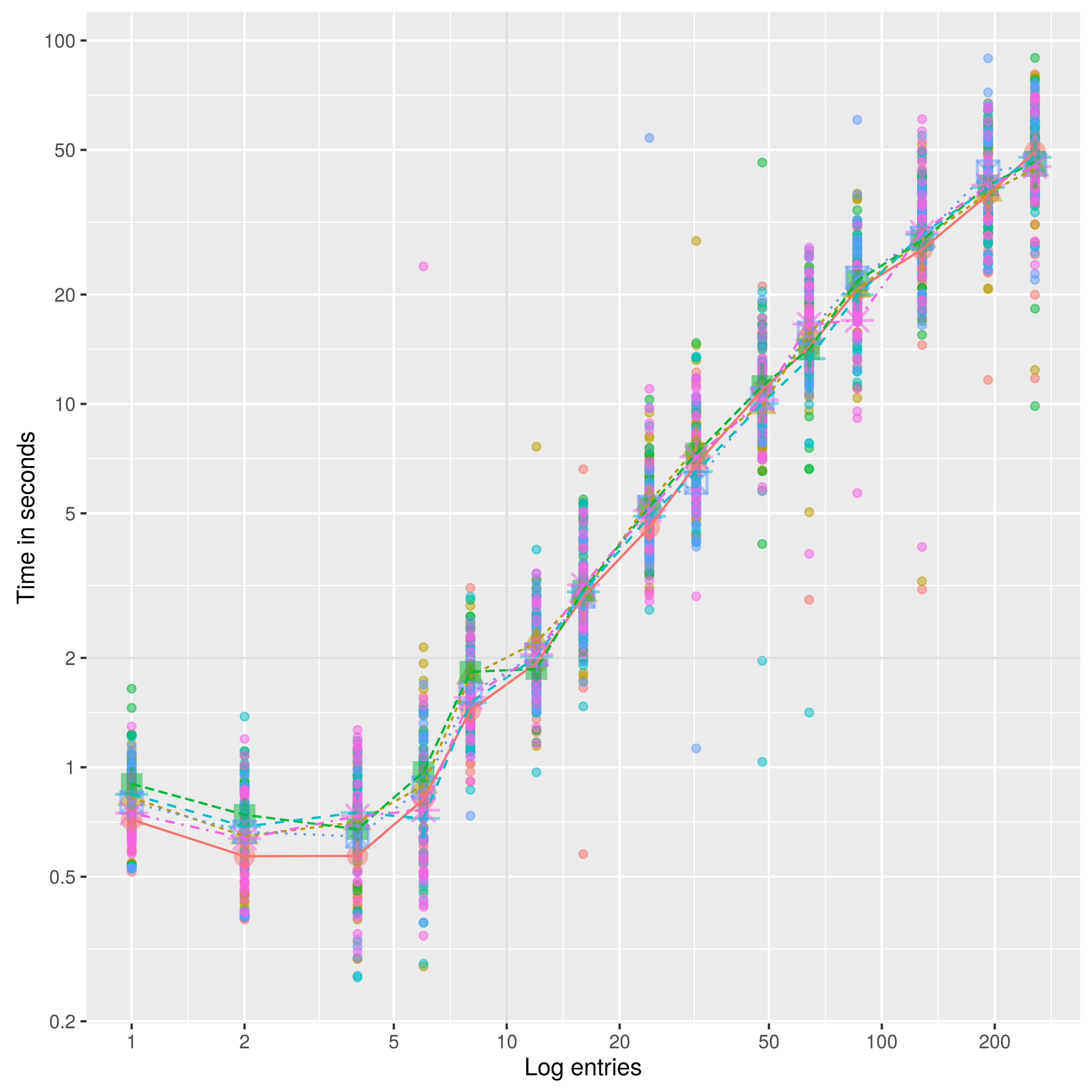

Param: Max Gossiped Blocks

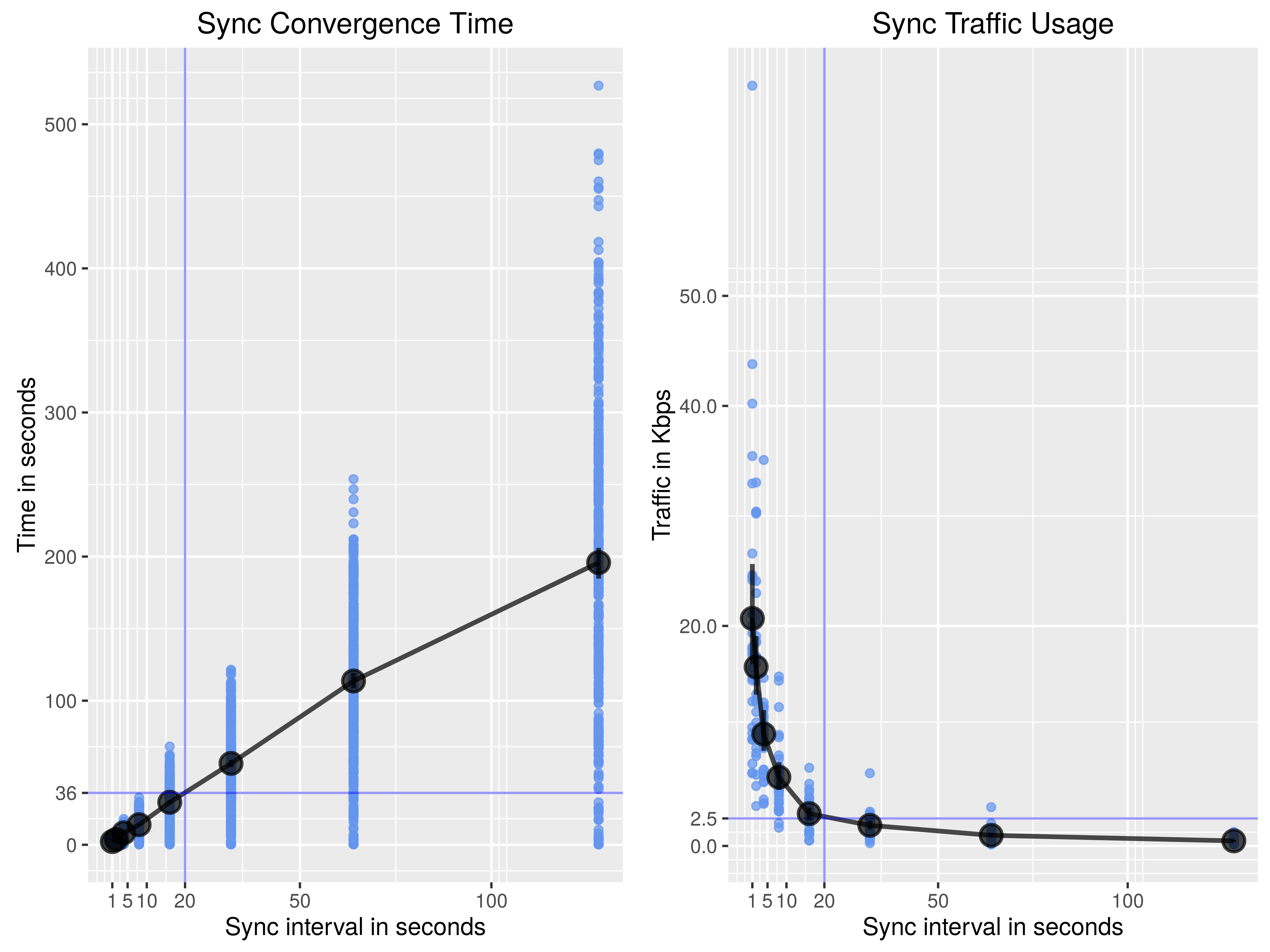

Param: Sync Protocol Interval

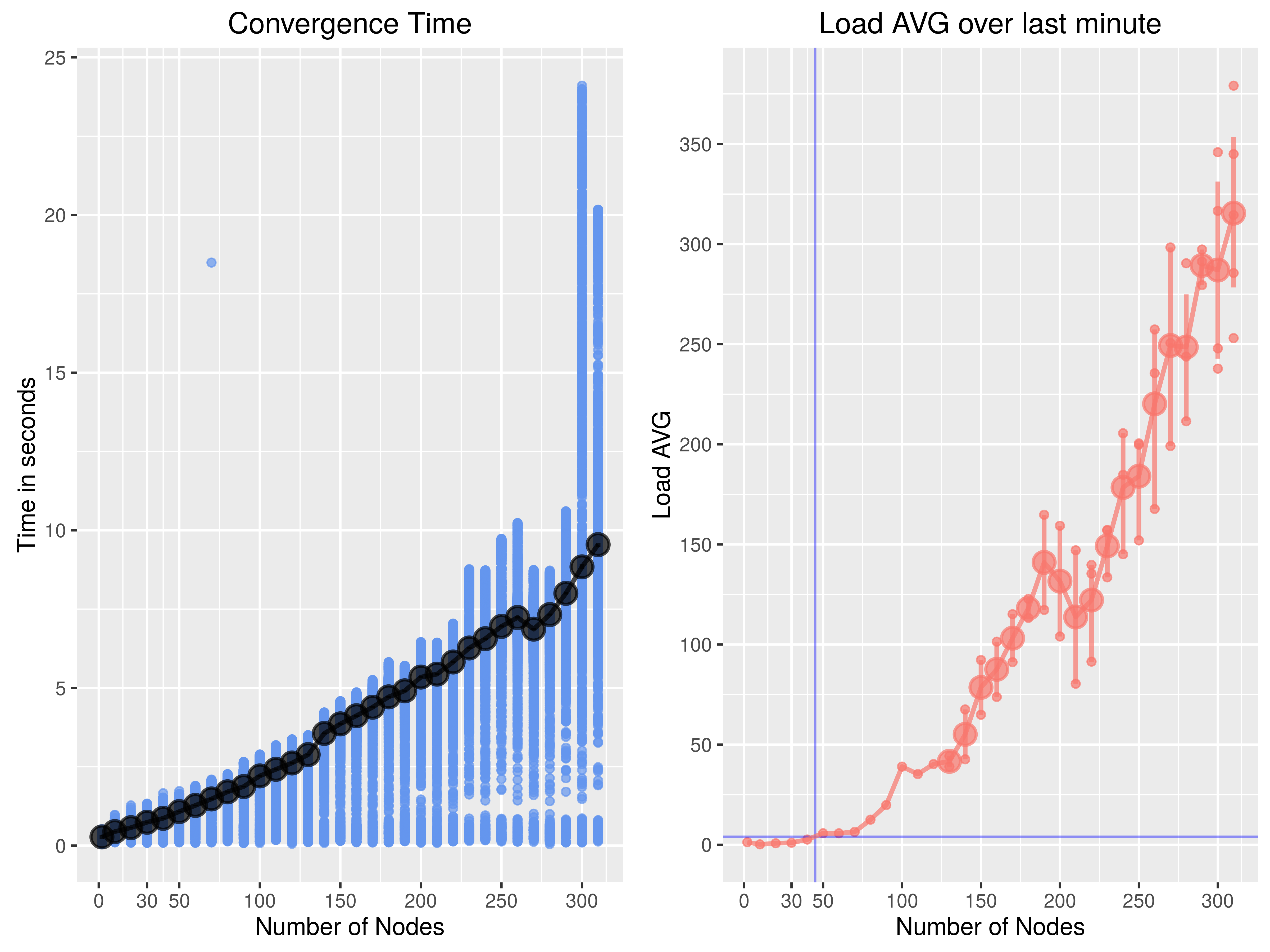

Param: Docker Cluster Size

Network Evaluation

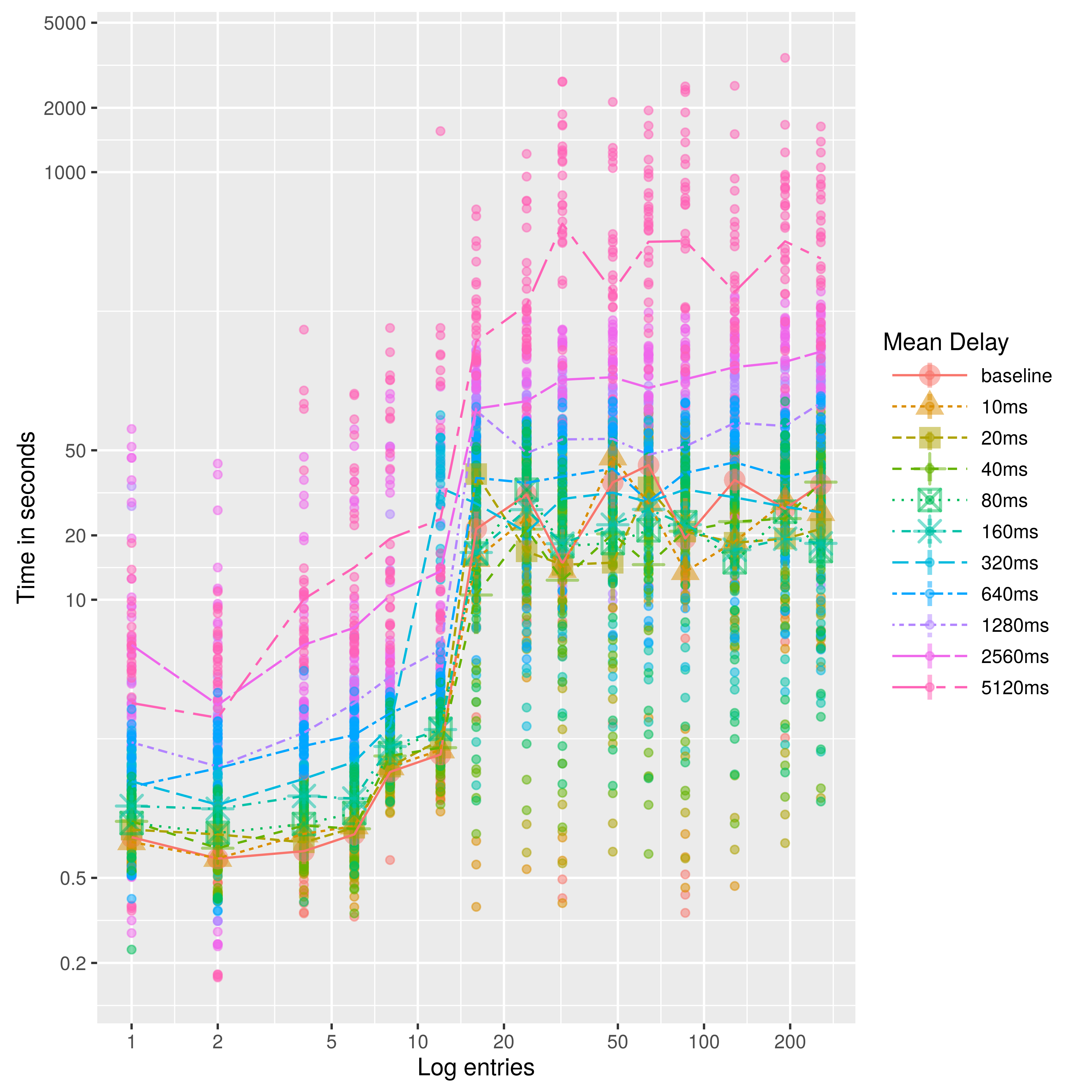

- How network characteristics (latency, packet loss and bandwidth) affect convergence time?

- Virtual Environment based on:

- 30 nodes cluster

- Write to one node and measure convergence time

Effect of Latency

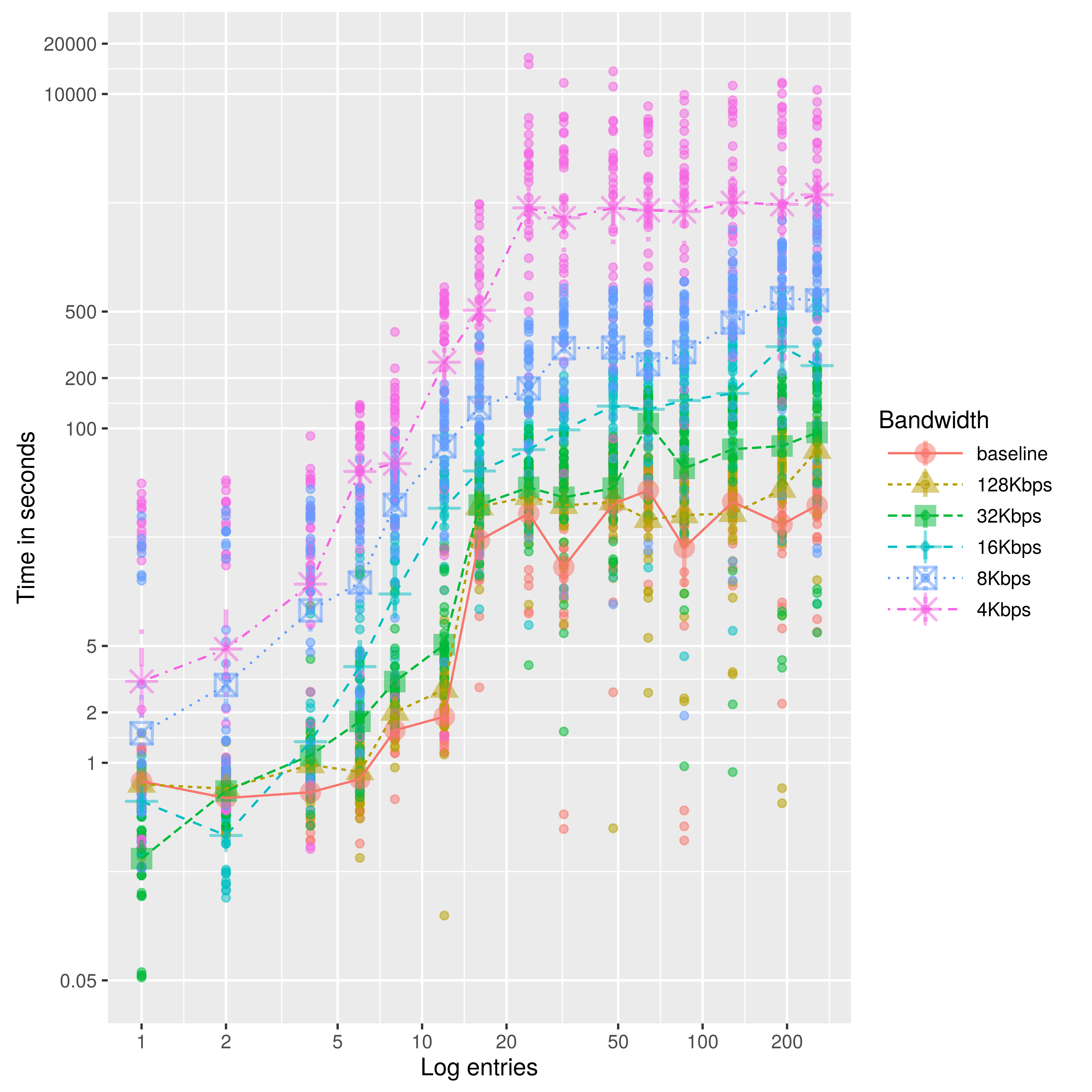

Effect of Bandwidth Limitation

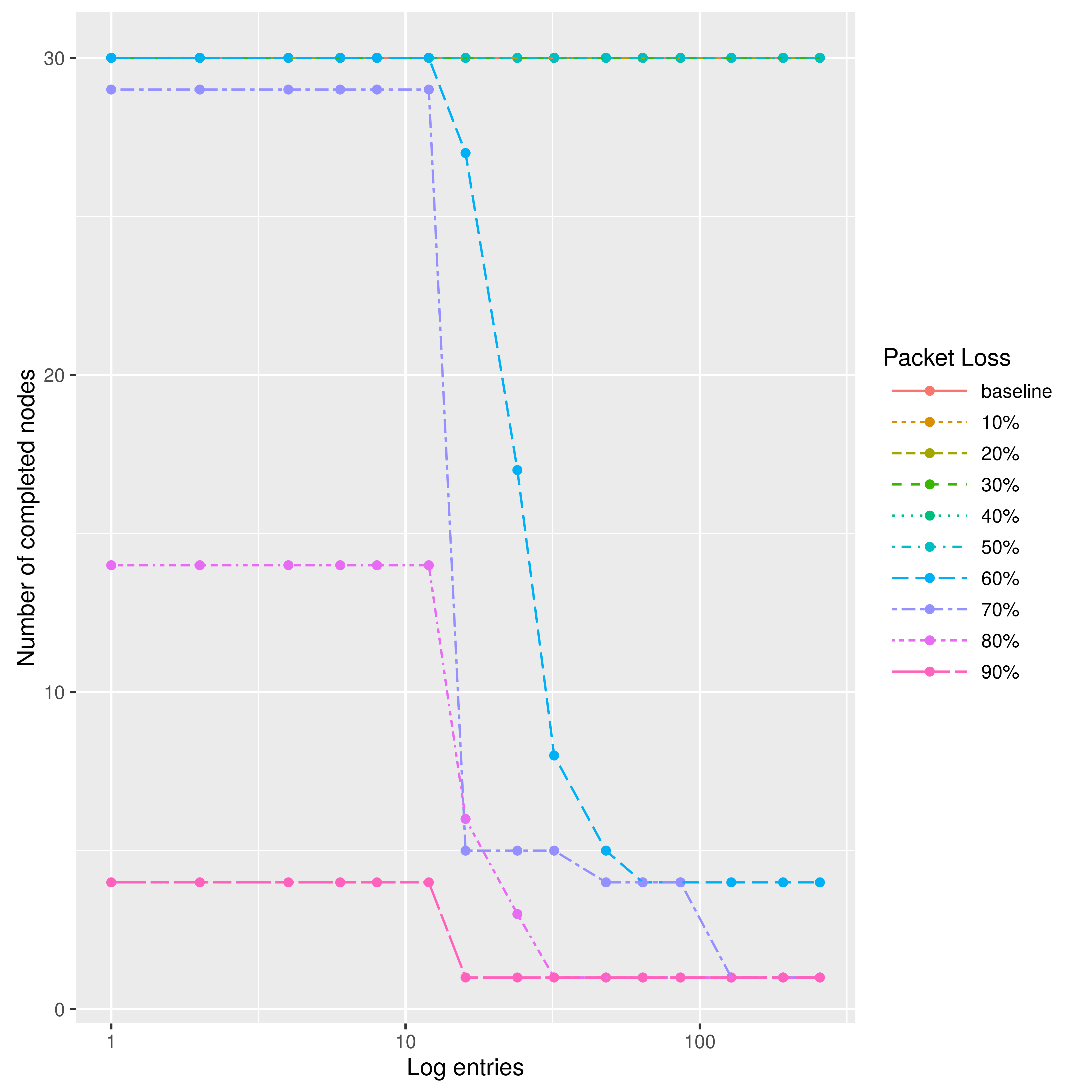

Effect of Packet Loss

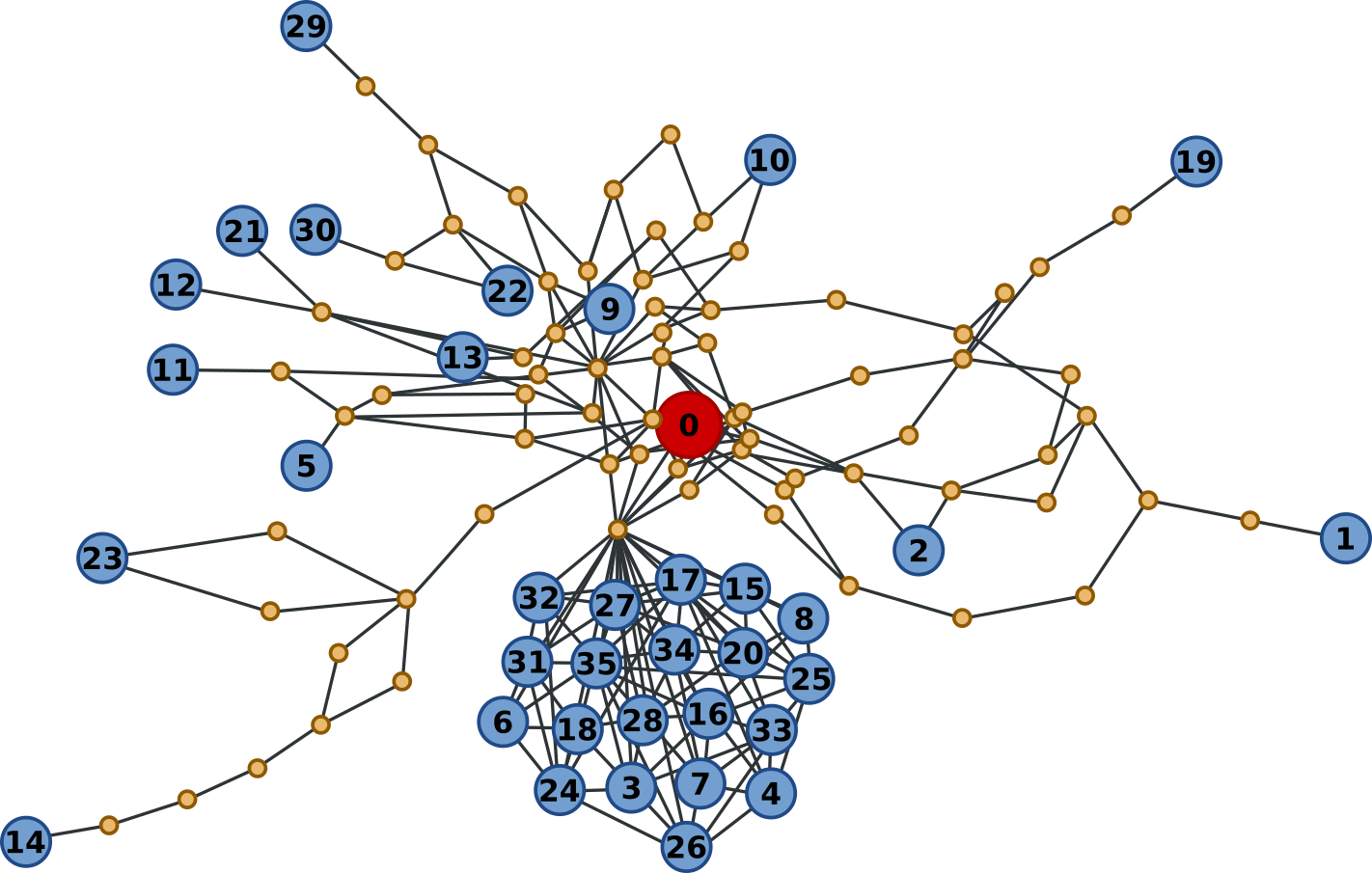

Community-Lab

- Community Network Testbed by the CONFINE project

- 36 node slice with public IPv4 connectivity

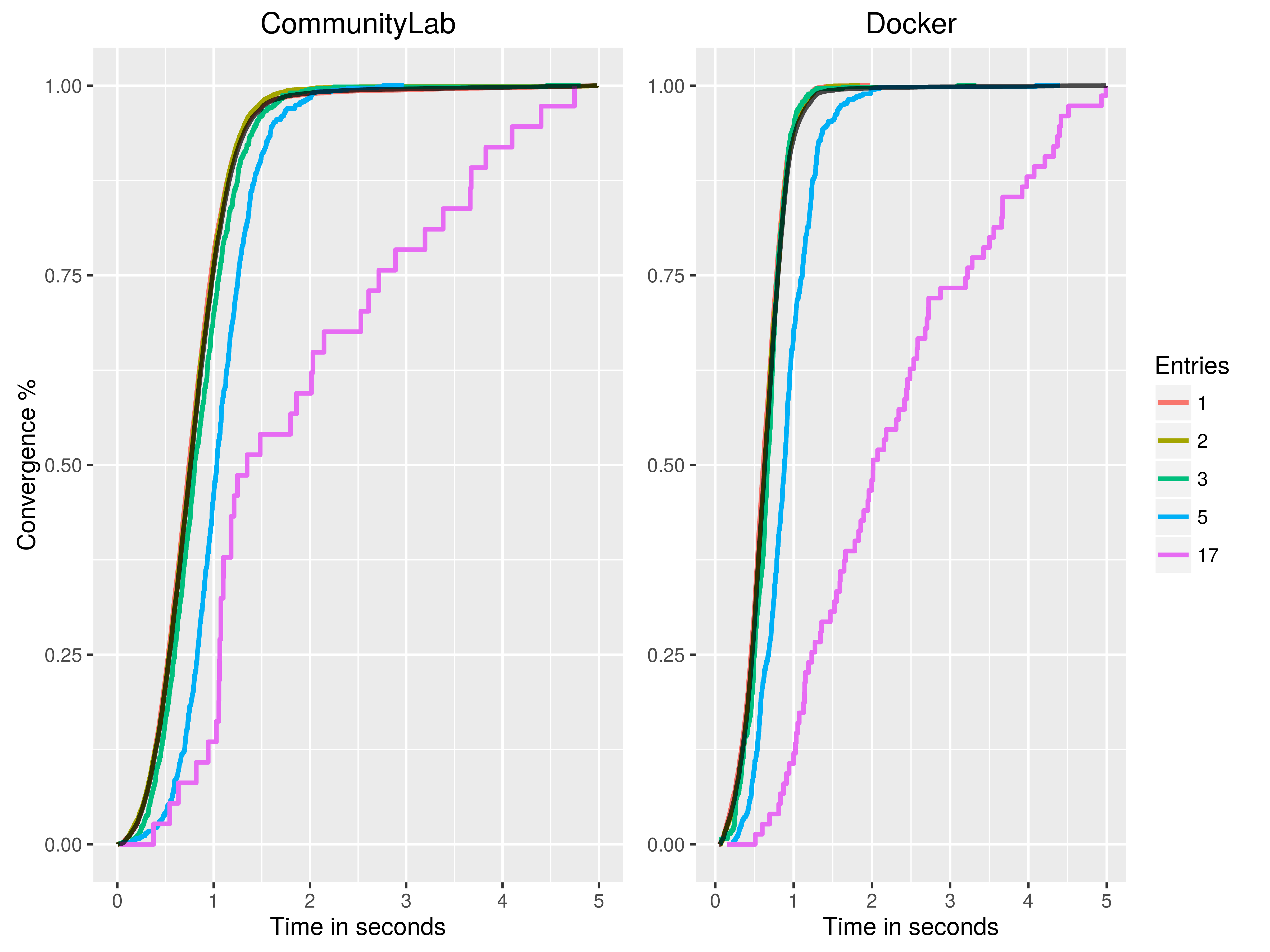

Community-Lab: Convergence

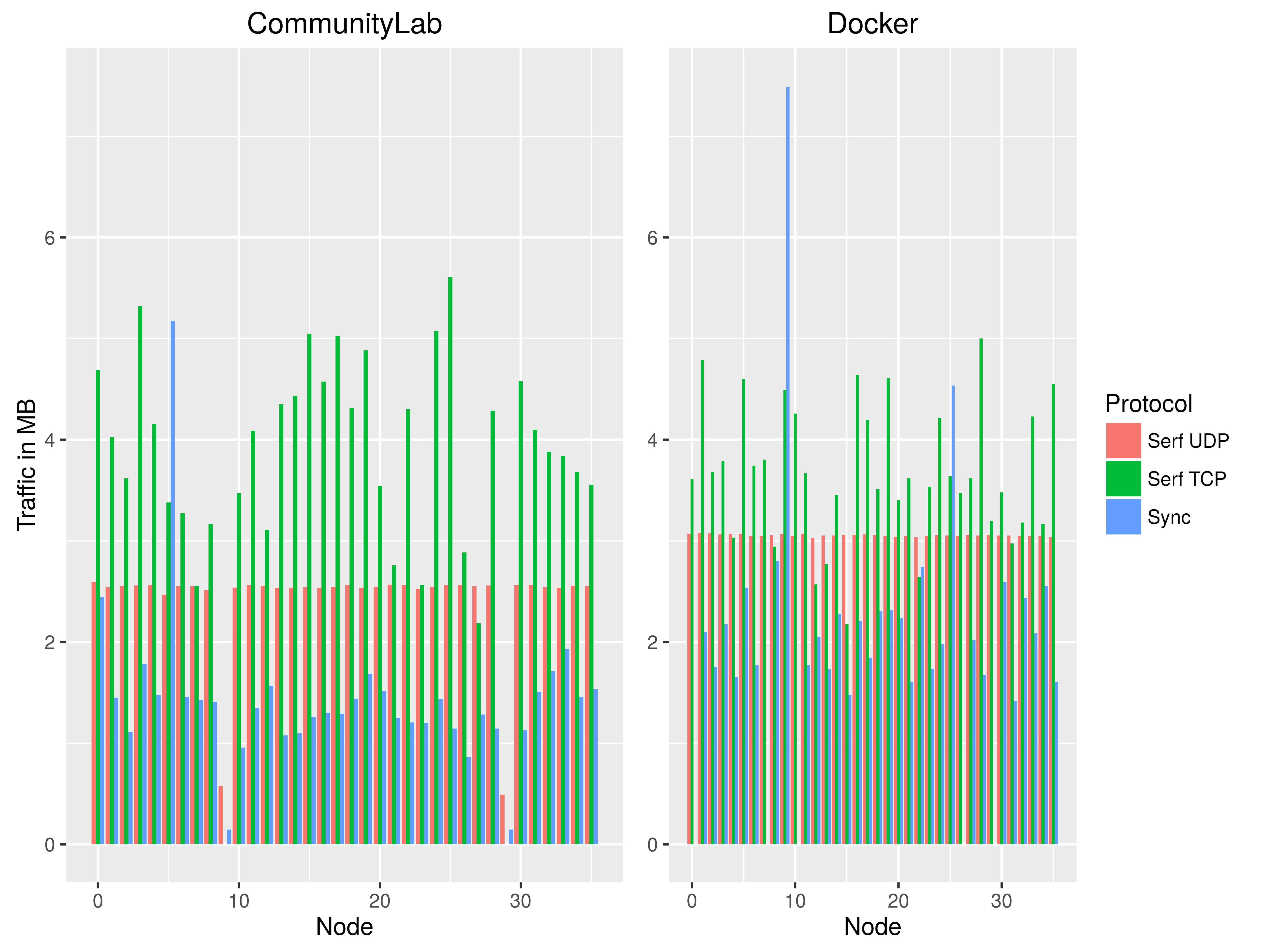

Com-Lab: Traffic distribution

File System IO Evaluation

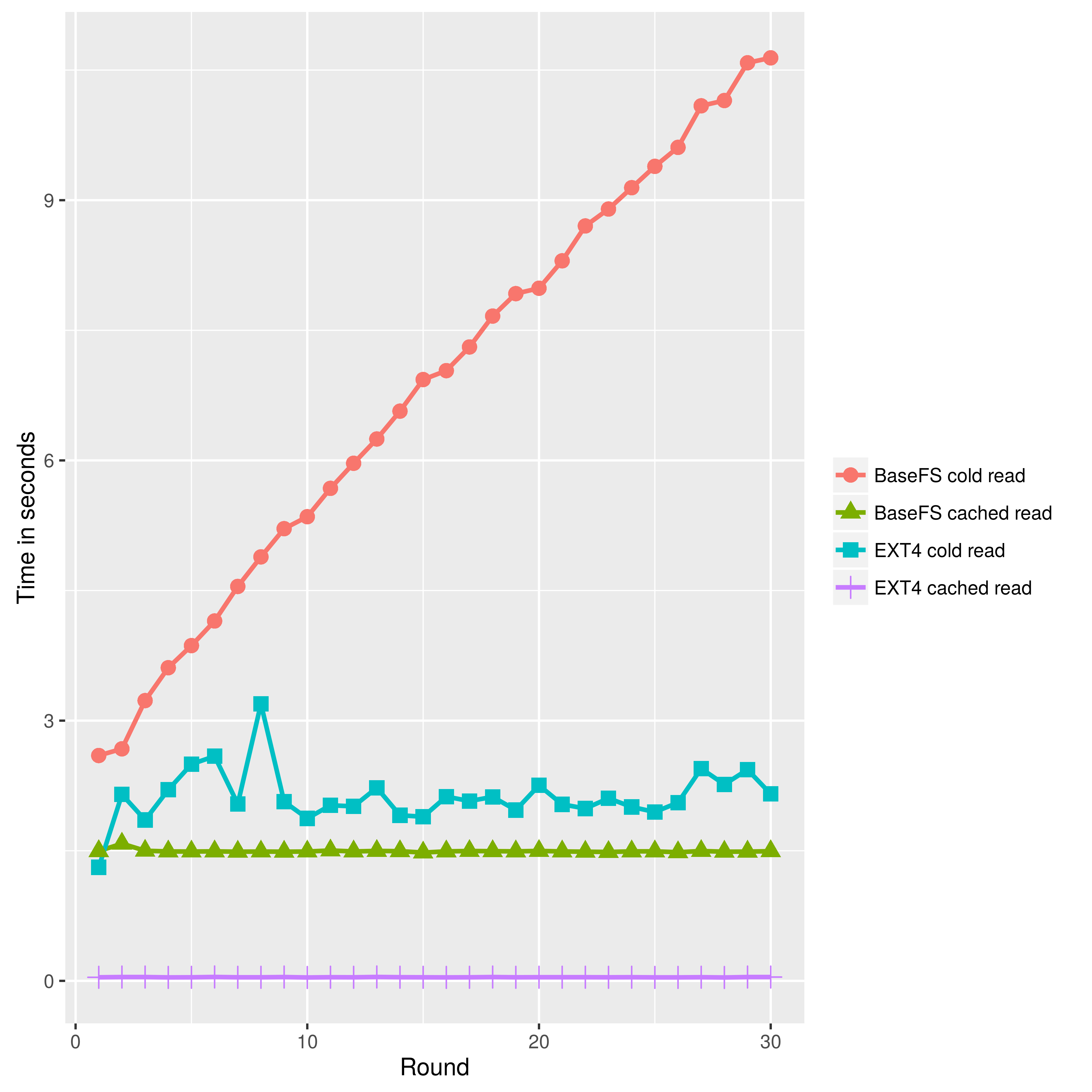

File System Read Performance

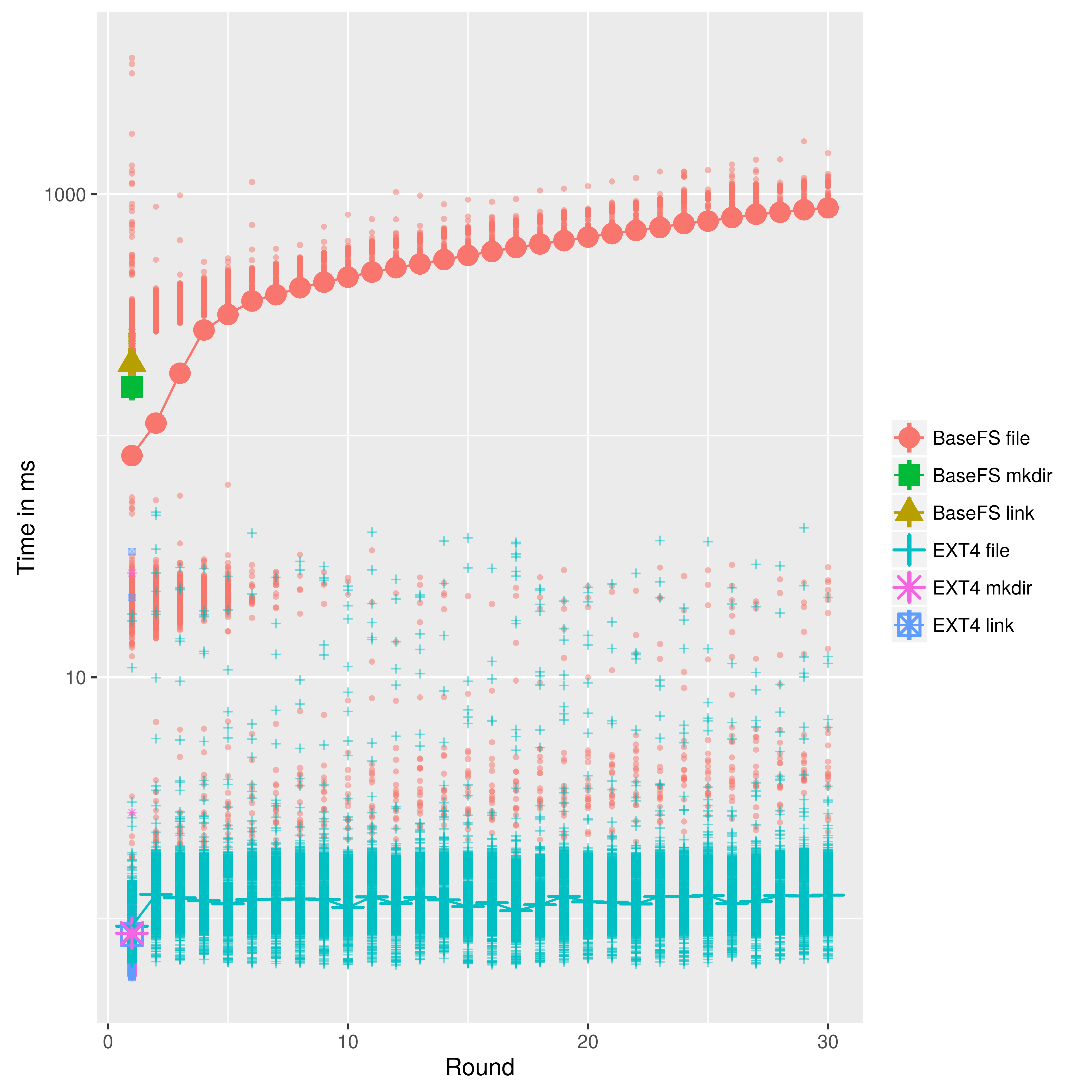

File System Write Performance

Plans for world domination:

As it is

- Community cloud configuration management

- Distributed version control system

- Distributed Dropbox-like applications

- Self-updatable documents (encyclopedia, discography)

Plans for world domination:

Better IO performance

BSDIFF4 and cPython are not the fastest

- System upgrade on distributed systems

- Shared in-memory database (memcached)

Plans for world domination:

Support for larger files

- Multiple encoding methods: BSDiff4 is optimized for small changes on small files

- Block manifest instead of block linked-list

- Block market with incentive mechanism

- Block garbage collection

- Mutable P2P file-sharing

- Generalized filesystem

Summary

- Strong consistency imposses strong constrains

- We have a lot to gain if we can relax consistency: scalability, availability and simplicity

- Cryptographic hashes are awesome!

- CvRDT Specialized Merkle tree is a powerfull data structure with useful guarantees

- Merkle trees allow for efficient synchronization

- Gossip protocols are efficient ways for group membership and state disemination in large weakly consistent systems

- Prototyping distributed filesystems is easy and fun with Python and FUSE